Summary: Wanted to do some testing on OAC behavior with large lists of data. So, I downloaded last 3 (and later last 5) years of NY Yellow taxi data to Object Storage on OCI (cloud.oracle.com) got curious about performance of the loading so tested it with 6,4 and 2 OCPUs of ADW (Autonomous Database). In each case we have the data loading time and the "Select count(*) from table;" time. I used only the default features of the UI and tested time only once. Next, I did some testing with OAC (Oracle Analytic Cloud).

The Data

I used the data from www.nyc.gov site (link). Used only the Yellow Taxi Trip records. The files are in parquet format. Uploaded it to my OCI (Oracle Cloud) bucket storage into a Data folder. Preferred not to make it public but rather create a PAR (Pre-Authenticated Request) for the entire Bucket for limited time:

Copied the created link and used it in the next step.

(I could work properly and define the URI in "Cloud Locations", but I will do it "quick and dirty" this time.)

Loading 92 Million records

On OCI a have a small (2 OCPUs) ADW instance. It is a managed instance of the DB. The smallest possible is 1 OCPU. (Actually, there is a smaller option when using it as always free - see here for details). In the Database interface selected "Database Actions" and there the Data Load option:

The "Data Load" let's me select few options:In my case the data is on Cloud Storage, and I can select to Load the data to the Database or Link Data and create an External table. Usually, the link option is very fast for table creation and slow for execution. It is good for data we rarely access and don't mind it to be a bit slower on execution.

In the loading screen I pasted the previously created PAR URL and dragged the entire DATA folder to the right:

Answered Yes to the question (and renamed the table from default to ytaxi100ext. Pressed Start and had an External table of almost 100 million rows created in 14 seconds.This is amazing time but the price comes with the query performance, 13.324 seconds for select that scans the entire table:

Please note the external table is based on the *.parquet files in the DIR and not specific named files. It's great since we are in a dynamic content case.

I was a bit worried about data loading, so I temporally scaled the ADW to 6 OCPUs (the payment granularity for ADW is by second, as a result, short scaling up is not that expensive):

This time I selected "Load Data" in the wizard:

The loading time was less than 2 minutes (1:56), and the select statement finished in 0.114 seconds.

| OCPU | Loading time | Select time |

| 2 | 4:38 | 0.138 |

| 4 | 2:33 | 0.112 |

| 6 | 1:56 | 0.114 |

Loading ~280 Million records

I was positively impressed by the numbers, so I uploaded 2 more years of data. Apparently, the taxi business was better in the years 2018 & 2019. Now I had 4 GB of parquet files with almost 280 million rows.| OCPU | Loading time | Select time |

| 2 | 14:34 | 0.205 |

| 4 | 7:02 | 0.311 |

| 6 | 5:02 | 0.268 |

Working with the data in OAC (Oracle Analytic Cloud)

To do it I enabled the OAC developer mode that shows me detailed performance data and dragged to the canvas the "Pickup date" and "Total_amount" columns. In the top part of the screen there is the resulting graph and at the bottom the performance timing and graph:

Now we can see 2 things:

1. there is garbage in the data, it should be for years 2019-2022 and not 2001-2097.

2. The total query time is less than 9 seconds, most of it in the DB.

To fix the first problem I'll filter the years. In the "Pickup Date" column automatic date hierarchy is created. I'll use the year level from there as filter.

Examining the chart, we see that the drop at Mar-2020 in the Total_amount is astonishing. The taxis business didn't fully recover since.

Let's zoom on it. The drop from ~ $5,000,000 a day to about $100,000 in few days is unbelievable.

Let's add a polynomial trend line and a median reference line.

What about the split by Day of Week? A regular graph that will show DOW by Year and Month doesn't help much:

I will split the 3 years into the following groups:

- first 2.5 month of 2020

- next 4.5 month of 2020

- and the rest.

Let's check the day of week breakdown between them. Top chart is 100% stacked bar, since I want to compare percentage and not absolute values:

As we can expect there is a decline in percentage of Saturday income at the peak of Covid, (9.94%, comparing to 13.33% before and 13.93% after). Interesting that there is a hike in Monday income share (16.57% comparing to 12.37% before and 13.23% after).

One more thing I wanted to check is the capability of searching in filters with many millions of distinct values. That is why I created the string datetime column. We can see that 99.8% of the values in the column are unique. That is over 91 million unique values.

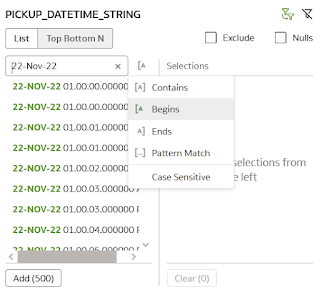

By default, the filters brings values in groups of 500, the search is not case sensitive and the default search is "Contains", while we can select other option, even cools ones such as Pattern Matching:

I'll try searching for columns that begins with "22-Nov-22", that are near the end of the list and got the relevant values within few seconds. Impressive!

Or with Pattern Matching, in this case, I was looking for mid-June or July 2020, at AM hours (the search string 1?-JU?-20*AM):

By the way, if you are worried about performance, it's improving once the results are cached:

One last thing...

What about the performance of the 280 million rows table, you might ask? Well not bad at all, especially, considering the fact that my queries force it to run on the entire table.

Here is the first run without cache:

And here it is with the Cache:

Or various modifications of filters after the first run:

Great performance.

Do you want it event better? Hope to cover it in the next post.

P.S.1

"Do as I say and not as I do" ... We should refrain from using Admin schema for actual work...

P.S.2

What I did here on Oracle Cloud with ADW and OAC can be done on premises as well, with the equivalent tools - Oracle DB EE and OAS (Oracle Analytic Server.)

No comments:

Post a Comment